You

can download existing and new content for ASP.NET, Office, SQL Server,

Windows Azure, SharePoint Server and other Microsoft technologies in

e-book formats. Reference, guide, and step-by-step information are all

available. All the e-books are free. New books will be posted as they

become available.

Tuesday, 21 August 2012

Sunday, 19 August 2012

SSIS – Transformations

SSIS supports numerous transformations that allow you to combine data

originating from multiple sources, cleanse the data and give it the

shape your data destination expects. Then you can import the data into a

single or multiple destinations.

| Transformation | Description | Examples of when Transformation Would be Used |

|---|---|---|

| Aggregate | Calculates aggregations such as SUM, COUNT, AVG, MIN and MAX based on the values of a given numeric column. This transformation produces additional output records. | Adding aggregated information to your output. This can be useful for adding totals and sub-totals to your output. |

| Audit | Includes auditing information, such as computer name where the package runs, package version ID, task name, etc in the data flow. | Creates advanced logs which indicate where and when the package was executed, how long it took to run the package and the outcome of execution. |

| Character Map | Performs minor manipulations on string columns. Converts all letters to uppercase, lowercase, reverse bytes, etc. | Applying string manipulations prior to loading data into the data warehouse. You can also apply the same manipulations to the data while it is being loaded into the warehouse. |

| Conditional Split | Accepts an input and determines which destination to pipe the data into based on the result of an expression. | Cleansing the data to extract specific rows from the source. If a specific column does not conform to the predefined format (perhaps it has leading spaces or zeros), move such records to the error file. |

| Copy Column | Makes a copy of a single or multiple columns which will be further transformed by subsequent tasks in the package. | Extracting columns that need to be cleansed of leading / trailing spaces, applying character map transformation to uppercase all data and then load it into the table. |

| Data Conversion | Converts input columns from one data type to another. | Converting columns extracted from the data source to the proper data type expected by the data warehouse. Having such transformation options allows us the freedom of moving data directly from its source into the destination without having an intermediary staging database. |

| Data Mining Query | Queries a data mining model. Includes a query builder to assist you with development of Data Mining eXpressions (DMX) prediction queries. | Evaluating the input data set against a data mining model developed with Analysis Services. |

| Derived Column | Calculates new column value based on an existing column or multiple columns. | Removing leading and trailing spaces from a column. Add title of courtesy (Mr., Mrs., Dr, etc) to the name. |

| Export Column | Exports contents of large columns (TEXT, NTEXT, IMAGE data types) into files. | Saving large strings or images into files while moving the rest of the columns into a transactional database or data warehouse. |

| Fuzzy Grouping | Finds close or exact matches between multiple rows in the data source. Adds columns to the output including the values and similarity scores. | Cleansing data by translating various versions of the same value to a common identifier. For example, “Dr”, “Dr.”, “doctor”, “M.D.” should all be considered equivalent. |

| Fuzzy Lookup | Compares values in the input data source rows to values in the lookup table. Finds the exact matches as well as those values that are similar. | Cleansing data by translating various versions of the same value to a common identifier. For example, “Dr”, “Dr.”, “doctor”, “M.D.” should all be considered equivalent. |

| Import Column | Imports contents of a file and appends to the output. Can be used to append TEXT, NTEXT and IMAGE data columns to the input obtained from a separate data source. | This transformation could be useful for web content developers. For example, suppose you offer college courses online. Normalized course meta-data, such as course_id, name, and description is stored in a typical relational table. Unstructured course meta-data, on the other hand, is stored in XML files. You can use Import Column transformation to add XML meta-data to a text column in your course table. |

| Lookup | Joins the input data set to the reference table, view or row set created by a SQL statement to lookup corresponding values. If some rows in the input data do not have corresponding rows in the lookup table then you must redirect such rows to a different output. | Obtaining additional data columns. For example, the majority of employee demographic information might be available in a flat file, but other data such as department where each employee works, their employment start date and job grade might be available from a table in relational database. |

| Merge | Merges two sorted inputs into a single output based on the values of the key columns in each data set. Merged columns must have either identical or compatible data types. For example you can merge VARCHAR(30) and VARCHAR(50) columns. You cannot merge INT and DATETIME columns. | Combining the columns from multiple data sources into a single row set prior to populating a dimension table in a data warehouse. Using Merge transformation saves the step of having a temporary staging area. With prior versions of SQL Server you had to populate the staging area first if your data warehouse had multiple transactional data sources. |

| Merge Join | Joins two sorted inputs using INNER JOIN, LEFT OUTER JOIN or FULL OUTER JOIN algorithm. You can specify columns used for joining inputs. | Combining the columns from multiple data sources into a single row set prior to populating a dimension table in a data warehouse. Using Merge Join transformation saves the step of having a temporary staging area. With prior versions of SQL Server you had to populate the staging area first if your data warehouse had multiple transactional data sources. Note that Merge and Merge Join transformations can only combine two data sets at a time. However, you could use multiple Merge Join transformations to include additional data sets. |

| Multicast | Similar to the conditional split transformation, but the entire data set is piped to multiple destinations. | Populating the relational warehouse as well as the source file with the output of a derived column transformation. |

| OLEDB Command | Runs a SQL command for each input data row. Normally your SQL statement will include a parameter (denoted by the question mark), for example: UPDATE employee_source SET has_been_loaded=1 WHERE employee_id=? | Setting the value of a column with BIT data type (perhaps called “has_been_loaded”) to 1 after the data row has been loaded into the warehouse. This way the subsequent loads will only attempt importing the rows that haven’t made it to the warehouse as of yet. |

| Percentage Sampling | Loads only a subset of your data, defined as the percentage of all rows in the data source. Note that rows are chosen randomly. | Limiting the data set during development phases of your project. Your data sources might contain billions of rows. Processing cubes against the entire data set can be prohibitively lengthy. If you’re simply trying to ensure that your warehouse functions properly and data values on transactional reports match the values obtained from your Analysis Services cubes you might wish to only load a subset of data into your cubes. |

| Pivot | Pivots the normalized data set by certain column to create a more easily readable output. Similar to PIVOT command in Transact-SQL. You can think of this transformation as converting rows into columns. For example if your input rows have customer, account number and account balance columns the output will have the customer and one column for each account. | Creating a row set that displays the table data in a more user-friendly format. The data set could be consumed by a web service or could be distributed to users through email. |

| Row count | Counts the number of transformed rows and store in a variable. | Determining the total size of your data set. You could also execute a different set of tasks based on the number of rows you have transformed. For example, if you increase the number of rows in your fact table by 5% you could perform no maintenance. If you increase the size of the table by 50% you might wish to rebuild the clustered index. |

| Row sampling | Loads only a subset of your data, defined as the number of rows. Note that rows are chosen randomly. | Limiting the data set during development phases of your project. Your data warehouse might contain billions of rows. Processing cubes against the entire data set can be prohibitively lengthy. If you’re simply trying to ensure that your warehouse functions properly and data values on transactional reports match the values obtained from your Analysis Services cubes you might wish to only load a subset of data into your cubes. |

| Script Component | Every data flow consists of three main components: source, destination and transformation. Script Component allows you to write transformations for otherwise un-supported source and destination file formats. Script component also allows you to perform transformations not directly available through the built-in transformation algorithms. | Custom transformations can call functions in managed assemblies,

including .NET framework. This type of transformation can be used when

the data source (or destination) file format cannot be managed by

typical connection managers. For example, some log files might not have

tabular data structures. At times you might also need to parse strings

one character at a time to import only the needed data elements. Much like Script Task the Script Component transformation must be written using Visual Basic .NET. |

| Slowly Changing Dimension | Maintains historical values of the dimension members when new members are introduced. | Useful for maintaining dimension tables in a data warehouse when maintaining historical dimension member values is necessary. |

| Sort | Sorts input by column values. You can sort the input by multiple columns in either ascending or descending order. The transformation also allows you to specify the precedence of columns used for sorting. This transformation could also discard the rows with duplicate sort values. | Ordering the data prior to loading it into a data warehouse. This could be useful if you’re ordering your dimension by member name values as opposed to sorting by member keys. You can also use Sort transformation prior to feeding the data as the input to the Merge Join or Merge transformation. |

| Term Extraction | Extracts terms (nouns and noun phrases) from the input text into the transformation output column. | Processing large text data and extracting main concepts. For example, you could extract the primary terms used in this section of SQLServerPedia by feeding the Term Extraction transformation the text column containing the entire section. |

| Term Lookup | Extracts terms from the input column with TEXT data type and match them with same or similar terms found in the lookup table. Each term found in the lookup table is scanned for in the input column. If the term is found the transformation returns the value as well as the number of times it occurs in the row. You can configure this transformation to perform case-sensitive search. | Analyzing large textual data for specific terms. For example, suppose you accept email feedback for latest version of your software. You might not have time to read through every single email messages that comes to the generic inbox. Instead you could use this task to look for specific terms of interest. |

| Union ALL | Combines multiple inputs into a single output. Rows are sorted in the order they’re added to the transformation. You can ignore some columns from each output, but each output column must be mapped to at least one input column. | Import data from multiple disparate data sources into a single destination. For example, you could extract data from mail system, text file, Excel spreadsheet and Access database and populate a SQL Server table. Unlike Merge and Merge Join transformations Union ALL can accept more than two inputs. |

| Unpivot | Opposite of Pivot transformation, Unpivot coverts columns into rows.

It normalizes the input data set that has many duplicate values in

multiple columns by creating multiple rows that have the same value in a

single column. For example if your input has a customer name and a separate column for checking and savings’ accounts Unpivot can transform it into a row set that has customer, account and account balance columns. |

Massaging a semi-structured input data file and convert it into a normalized input prior to loading data into a warehouse. |

Friday, 17 August 2012

Regular Expressions in SSIS

Case

I want to use a Regular Expression to filter incorrect emailaddresses in SSIS.

Solution

There are a couple of options:

first_name last_name email_address cell_phone address_1 NK coupon test

suman kanam suman@gmail.com 123455 123456 1w23e4 1w23e4 1

venkat budati budati@gmail.com 123456 1w23e sdfffldfsj seljsek 2

sss sss ss@.com 123456 12964sdfgh fghjk dfghj 3

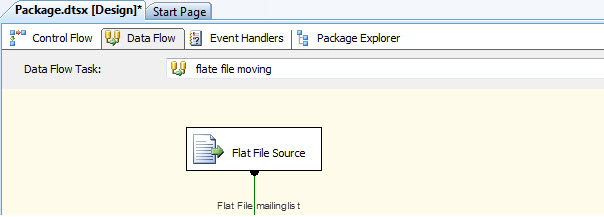

1) Add source

Add a Flat File Source Component in your dataflow for the file example above.

I want to use a Regular Expression to filter incorrect emailaddresses in SSIS.

Solution

There are a couple of options:

- Microsoft built a SSIS Sample Component for regex, but it's only available for 2005.

- Konesans built a RegexClean Transformation and a Regular Expression Transformation.

- There is also an open source project named RegExtractor SSIS Component.

- And you can even do it with Regular Expressions in T-SQL.

- But you can also do it your self with a Script Component Transformation

first_name last_name email_address cell_phone address_1 NK coupon test

suman kanam suman@gmail.com 123455 123456 1w23e4 1w23e4 1

venkat budati budati@gmail.com 123456 1w23e sdfffldfsj seljsek 2

sss sss ss@.com 123456 12964sdfgh fghjk dfghj 3

1) Add source

Add a Flat File Source Component in your dataflow for the file example above.

2) Add Script Component

Add a Script Component, type transformation, after your Flat File Source Component.

3) Input Columns

Edit the Script Component and select the email address column as an input column (readonly).

Input Columns tab

4) Outputs

Change the name of the default output port to CorrectEmail and set the

ExclusionGroup to 1. Add an extraoutput port for the IncorrectEmail and

set the ExclusionGroup to 1. Also select the input port as the

SynchronousInputID. Now you have two output ports. One for the correct

and one for the incorrect email address.

Inputs and Outputs tab

5) The Script

Hit the Edit Script button to open the VSTA editor. SSIS creates some standard methods, but we only use Input0_ProcessInputRow.

You can remove the rest of the methods. We are also adding a new method

that validates the email address. It uses a .Net Regex method. You can

search the web for your own regex pattern and change it in the extra

method.

// C# code

using System;

using System.Data;

using Microsoft.SqlServer.Dts.Pipeline.Wrapper;

using Microsoft.SqlServer.Dts.Runtime.Wrapper;

using System.Text.RegularExpressions;

[Microsoft.SqlServer.Dts.Pipeline.SSISScriptComponentEntryPointAttribute]

public class ScriptMain : UserComponent

{

public override void PreExecute()

{

base.PreExecute();

/*

Add your code here for preprocessing or remove if not needed

*/

}

public override void PostExecute()

{

base.PostExecute();

/*

Add your code here for postprocessing or remove if not needed

You can set read/write variables here, for example:

Variables.MyIntVar = 100

*/

}

public override void Input0_ProcessInputRow(Input0Buffer Row)

{

// Validate the email address

if (IsCorrectEmail(Row.emailaddress))

{

// Redirect the correct email address

Row.DirectRowToCorrectEmail();

}

else

{

// Redirect the incorrect email address

Row.DirectRowToInCorrectEmail();

}

}

public bool IsCorrectEmail(String emailAddress)

{

// The pattern for email

string emailAddressPattern = @"^(([^<>()[\]\\.,;:\s@\""]+"

+ @"(\.[^<>()[\]\\.,;:\s@\""]+)*)|(\"".+\""))@"

+ @"((\[[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}"

+ @"\.[0-9]{1,3}\])|(([a-zA-Z\-0-9]+\.)+"

+ @"[a-zA-Z]{2,}))$";

// Create a regex object with the pattern

Regex emailAddressRegex = new Regex(emailAddressPattern);

// Check if it is match and return that value (boolean)

return emailAddressRegex.IsMatch(emailAddress);

}

}

//VB.Net code (SSIS 2008)Imports SystemImports System.DataImports System.MathImports System.Text.RegularExpressions ' AddedImports Microsoft.SqlServer.Dts.Pipeline.WrapperImports Microsoft.SqlServer.Dts.Runtime.Wrapper<microsoft.sqlserver.dts.pipeline.ssisscriptcomponententrypointattribute> _<clscompliant(false)> _Public Class ScriptMain Inherits UserComponent ' Method that will be executed for each row. Public Overrides Sub Input0_ProcessInputRow(ByVal Row As Input0Buffer) ' Validate the email address If (IsCorrectEmail(Row.Email)) Then ' Redirect the correct email address Row.DirectRowToCorrectEmail() Else ' Redirect the incorrect email address Row.DirectRowToIncorrectEmail() End If End Sub ' A boolean method that validates an email address ' with a regex pattern. Public Function IsCorrectEmail(ByVal emailAddress As String) As Boolean ' The pattern for email Dim emailAddressPattern As String = "^(([^<>()[\]\\.,;:\s@\""]+" + _ "(\.[^<>()[\]\\.,;:\s@\""]+)*)|(\"".+\""))@" + _ "((\[[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}" + _ "\.[0-9]{1,3}\])|(([a-zA-Z\-0-9]+\.)+" + _ "[a-zA-Z]{2,}))$" ' Create a regex object with the pattern Dim emailAddressRegex As Regex = New Regex(emailAddressPattern) ' Check if it is match and return that value (boolean) Return emailAddressRegex.IsMatch(emailAddress) End FunctionEnd Class</clscompliant(false)></microsoft.sqlserver.dts.pipeline.ssisscriptcomponententrypointattribute>

6) The result

For testing purposes I added two Derived Columns with data viewes on the Data Flow Paths. vbudati,alias SSIS Junkie, has an other example in VB.net available. And you can also use Regular Expression to clean column values. More about that is described in this article.

thanks

venkat.budati

Wednesday, 15 August 2012

SSIS, SSAS and SSRS

SSIS - SQL Server Integration Services

Q: What is SSIS? How it related with SQL Server.SQL Server Integration Services (SSIS) is a component of SQL Server which can be used to perform a wide range of Data Migration and ETL operations. SSIS is a component in MSBI process of SQL Server.

This is a platform for Integration and Workflow applications. It is known for a fast and flexible OLTP and OLAP extensions used for data extraction, transformation, and loading (ETL). The tool may also be used to automate maintenance of SQL Server databases and multidimensional data sets.

Q: What are the tools associated with SSIS?

We use Business Intelligence Development Studio (BIDS) and SQL Server Management Studio (SSMS) to work with Development of SSIS Projects.

We use SSMS to manage the SSIS Packages and Projects.

Q: What are the differences between DTS and SSIS

Data Transformation Services |

SQL Server Integration Services |

Limited Error Handling |

Complex and powerful Error Handling |

Message Boxes in ActiveX Scripts |

Message Boxes in .NET Scripting |

No Deployment Wizard |

Interactive Deployment Wizard |

Limited Set of Transformation |

Good number of Transformations |

NO BI functionality |

Complete BI Integration |

Q: What is a workflow in SSIS ?

Workflow is a set of instructions on to specify the Program Executor on how to execute tasks and containers within SSIS Packages.

Q: What is the control flow?

A control flow consists of one or more tasks and containers that execute when the package runs. To control order or define the conditions for running the next task or container in the package control flow, we use precedence constraints to connect the tasks and containers in a package. A subset of tasks and containers can also be grouped and run repeatedly as a unit within the package control flow. SQL Server 2005 Integration Services (SSIS) provides three different types of control flow elements: Containers that provide structures in packages, Tasks that provide functionality, and Precedence Constraints that connect the executables, containers, and tasks into an ordered control flow.

Q: What is a data flow?

A data flow consists of the sources and destinations that extract and load data, the transformations that modify and extend data, and the paths that link sources, transformations, and destinations The Data Flow task is the executable within the SSIS package that creates, orders, and runs the data flow. A separate instance of the data flow engine is opened for each Data Flow task in a package. Data Sources, Transformations, and Data Destinations are the three important categories in the Data Flow.

Q: How does Error-Handling work in SSIS

When a data flow component applies a transformation to column data, extracts data from sources, or loads data into destinations, errors can occur. Errors frequently occur because of unexpected data values.

Type of typical Errors in SSIS:

-Data Connection Errors, which occur incase the connection manager cannot be initialized with the connection string. This applies to both Data Sources and Data Destinations along with Control Flows that use the Connection Strings.

-Data Transformation Errors, which occur while data is being transformed over a Data Pipeline from Source to Destination.

-Expression Evaluation errors, which occur if expressions that are evaluated at run time perform invalid

Q: What is environment variable in SSIS?

An environment variable configuration sets a package property equal to the value in an environment variable.

Environmental configurations are useful for configuring properties that are dependent on the computer that is executing the package.

Q: What are the Transformations available in SSIS?

AGGEGATE - It applies aggregate functions to Record Sets to produce new output records from aggregated values.

AUDIT - Adds Package and Task level Metadata - such as Machine Name, Execution Instance, Package Name, Package ID, etc..

CHARACTER MAP - Performs SQL Server level makes string data changes such as changing data from lower case to upper case.

CONDITIONAL SPLIT – Separates available input into separate output pipelines based on Boolean Expressions configured for each output.

COPY COLUMN - Add a copy of column to the output we can later transform the copy keeping the original for auditing.

DATA CONVERSION - Converts columns data types from one to another type. It stands for Explicit Column Conversion.

DATA MINING QUERY – Used to perform data mining query against analysis services and manage Predictions Graphs and Controls.

DERIVED COLUMN - Create a new (computed) column from given expressions.

EXPORT COLUMN – Used to export a Image specific column from the database to a flat file.

FUZZY GROUPING – Used for data cleansing by finding rows that are likely duplicates.

FUZZY LOOKUP - Used for Pattern Matching and Ranking based on fuzzy logic.

IMPORT COLUMN - Reads image specific column from database onto a flat file.

LOOKUP - Performs the lookup (searching) of a given reference object set against a data source. It is used for exact matches only.

MERGE - Merges two sorted data sets into a single data set into a single data flow.

MERGE JOIN - Merges two data sets into a single dataset using a join junction.

MULTI CAST - Sends a copy of supplied Data Source onto multiple Destinations.

ROW COUNT - Stores the resulting row count from the data flow / transformation into a variable.

ROW SAMPLING - Captures sample data by using a row count of the total rows in dataflow specified by rows or percentage.

UNION ALL - Merge multiple data sets into a single dataset.

PIVOT – Used for Normalization of data sources to reduce analomolies by converting rows into columns

UNPIVOT – Used for demoralizing the data structure by converts columns into rows incase of building Data Warehouses.

Q: How to log SSIS Executions?

SSIS includes logging features that write log entries when run-time events occur and can also write custom messages. This is not enabled by default. Integration Services supports a diverse set of log providers, and gives you the ability to create custom log providers. The Integration Services log providers can write log entries to text files, SQL Server Profiler, SQL Server, Windows Event Log, or XML files. Logs are associated with packages and are configured at the package level. Each task or container in a package can log information to any package log. The tasks and containers in a package can be enabled for logging even if the package itself is not.

Q: How do you deploy SSIS packages.

BUILDing SSIS Projects provides a Deployment Manifest File. We need to run the manifest file and decide whether to deploy this onto File System or onto SQL Server [ msdb]. SQL Server Deployment is very faster and more secure then File System Deployment. Alternatively, we can also import the package from SSMS from File System or SQ Server.

Q: What are variables and what is variable scope ?

Variables store values that a SSIS package and its containers, tasks, and event handlers can use at run time. The scripts in the Script task and the Script component can also use variables. The precedence constraints that sequence tasks and containers into a workflow can use variables when their constraint definitions include expressions. Integration Services supports two types of variables: user-defined variables and system variables. User-defined variables are defined by package developers, and system variables are defined by Integration Services. You can create as many user-defined variables as a package requires, but you cannot create additional system variables.

Q: Can you name five of the Perfmon counters for SSIS and the value they provide?

- SQLServer:SSIS Service

- SSIS Package Instances

- SQLServer:SSIS Pipeline

- BLOB bytes read

- BLOB bytes written

- BLOB files in use

- Buffer memory

- Buffers in use

- Buffers spooled

- Flat buffer memory

- Flat buffers in use

- Private buffer memory

- Private buffers in use

- Rows read

- Rows written

SSAS - SQL Server Analysis Services

Q: What is Analysis Services? List out the features?Microsoft SQL Server 2005 Analysis Services (SSAS) delivers online analytical processing (OLAP) and data mining functionality for business intelligence applications. Analysis Services supports OLAP by letting we design, create, and manage multidimensional structures that contain data aggregated from other data sources, such as relational databases. For data mining applications, Analysis Services lets we design, create, and visualize data mining models that are constructed from other data sources by using a wide variety of industry-standard

data mining algorithms.

Analysis Services is a middle tier server for analytical processing, OLAP, and Data mining. It manages multidimensional cubes of data and provides access to heaps of information including aggregation of data. One can create data mining models from data sources and use it for Business Intelligence also including reporting features.

Analysis service provides a combined view of the data used in OLAP or Data mining. Services here refer to OLAP, Data mining. Analysis services assists in creating, designing and managing multidimensional structures containing data from varied sources. It provides a wide array of data mining algorithms for specific trends and needs.

Some of the key features are:

- Ease of use with a lot of wizards and designers.

- Flexible data model creation and management

- Scalable architecture to handle OLAP

- Provides integration of administration tools, data sources, security, caching, and reporting etc.

- Provides extensive support for custom applications

The role of a Unified Dimensional Model (UDM) is to provide a bridge between the user and the data sources. A UDM is constructed over one or more physical data sources, and then the end user issues queries against the UDM using one of a variety of client tools, such as Microsoft Excel. At a minimum, when the UDM is constructed merely as a thin layer over the data source, the advantages to the end user are a simpler, more readily understood model of the data, isolation from heterogeneous backend data sources, and improved performance for summary type queries. In some scenarios a simple UDM like this is constructed totally automatically. With greater investment in the construction of the UDM, additional benefits accrue from the richness of metadata that the model can provide.

The UDM provides the following benefits:

• Allows the user model to be greatly enriched.

• Provides high performance queries supporting interactive analysis, even over huge data volumes.

• Allows business rules to be captured in the model to support richer analysis.

Q: What is the need for SSAS component?

- Analysis Services is the only component in SQL Server using which we can perform Analysis and Forecast operations.

- SSAS is very easy to use and interactive.

- Faster Analysis and Troubleshooting.

- Ability to create and manage Data warehouses.

- Apply efficient Security Principles.

- SSAS uses both server and client components to supply OLAP and data mining functionality BI Applications.

- The server component is implemented as a Microsoft Windows service. Each instance of Analysis Services implemented as a separate instance of the Windows service.

- Clients communicate with Analysis Services using the standard the XMLA (XML For Analysis) , protocol for issuing commands and receiving responses, exposed as a web service.

- An OLAP Engine is used for enabling fast ad hoc queries by end users. A user can interactively explore data by drilling, slicing or pivoting.

- Drilling refers to the process of exploring details of the data.

- Slicing refers to the process of placing data in rows and columns.

- Pivoting refers to switching categories of data between rows and columns.

- In OLAP, we will be using what are called as Dimensional Databases.

A database is called a OLAP Database if the database satisfies the FASMI rules :

- Fast Analysis– is defined in the OLAP scenario in five seconds or less.

- Shared – Must support access to data by many users in the factors of Sensitivity and Write Backs.

- Multidimensional – The data inside the OLAP Database must be multidimensional in structure.

- Information – The OLAP database Must support large volumes of data..

- Structured Query Language (SQL)

- Multidimensional Expressions (MDX) - an industry standard query language orientated towards analysis

- Data Mining Extensions (DMX) - an industry standard query language oriented toward data mining.

- Analysis Services Scripting Language (ASSL) - used to manage Analysis Services database objects.

- Cubes are multidimensional models that store data from one or more sources.

- Cubes can also store aggregations

- SSAS Cubes are created using the Cube Wizard.

- We also build Dimensions when creating Cubes.

- Cubes can see only the DSV( logical View).

The difference between a derived measure and a calculated measure is when the calculation is performed. A derived measure is calculated before aggregations are created, and the values of the derived measure are stored in the cube. A calculated measure is calculated after aggregations are created, and the values of a calculated measure aren’t stored in the cube. The primary criterion for choosing between a derived measure and a calculated measure is not efficiency, but accuracy.

Q: What is a partition?

A partition in Analysis Services is the physical location of stored cube data. Every cube has at least one partition by default. Each time we create a measure group, another partition is created. Queries run faster against a partitioned cube because Analysis Services only needs to read data from the partitions that contain the answers to the queries. Queries run even faster when partition also stores aggregations, the pre calculated totals for additive measures. Partitions are a powerful and flexible means of managing cubes, especially large cubes.

Q: While creating a new calculated member in a cube what is the use of property

called non-empty behavior?

Nonempty behavior is important property for ratio calculations. If the denominator Is empty, an MDX expression will return an error just as it would if the denominator Were equal to zero. By selecting one or more measures for the Non-Empty Behavior property, we are establishing a requirement that each selected measure first be evaluated before the calculation expression is evaluated. If each selected measure is empty, then The expression is also treated as empty and no error is returned.

Q: What is a RAGGED hierarchy?

Under normal circumstances, each level in a hierarchy in Microsoft SQL Server 2005 Analysis Services (SSAS) has the same number of members above it as any other member at the same level. In a ragged hierarchy, the logical parent member of at least one member is not in the level immediately above the member. When this occurs, the hierarchy descends to different levels for different drilldown paths. Expanding through every level for every drilldown path is then unnecessarily complicated.

Q: What are the roles of an Analysis Services Information Worker?

The role of an Analysis Services information worker is the traditional "domain expert" role in business intelligence (BI) someone who understands the data employed by a solution and is able to translate the data into business information. The role of an Analysis Services information worker often has one of the following job titles: Business Analyst (Report Consumer), Manager (Report Consumer), Technical Trainer, Help Desk/Operation, or Network Administrator.

Q: What are the different ways of creating Aggregations?

We can create aggregations for faster MDX statements using Aggregation Wizard or thru UBO – Usage Based Optimizations. Always, prefer UBO method in realtime performance troubleshooting.

Q: What is WriteBack? What are the pre-conditions?

The Enable/Disable Writeback dialog box enables or disables writeback for a measure group in a cube. Enabling writeback on a measure group defines a writeback partition and creates a writeback table for that measure group. Disabling writeback on a measure group removes the writeback partition but does not delete the writeback table, to avoid unanticipated data loss.

Q: What is processing?

Processing is a critical and resource intensive operation in the data warehouse lifecycle and needs to be carefully optimized and executed. Analysis Services 2005 offers a high performance and scalable processing architecture with a comprehensive set of controls for database administrators.

We can process an OLAP database, individual cube, Dimension or a specific Partition in a cube.

We can create aggregations for faster MDX statements using Aggregation Wizard or thru UBO – Usage Based Optimizations. Always, prefer UBO method in realtime performance troubleshooting.

Q: What is WriteBack? What are the pre-conditions?

The Enable/Disable Writeback dialog box enables or disables writeback for a measure group in a cube. Enabling writeback on a measure group defines a writeback partition and creates a writeback table for that measure group. Disabling writeback on a measure group removes the writeback partition but does not delete the writeback table, to avoid unanticipated data loss.

Q: What is processing?

Processing is a critical and resource intensive operation in the data warehouse lifecycle and needs to be carefully optimized and executed. Analysis Services 2005 offers a high performance and scalable processing architecture with a comprehensive set of controls for database administrators.

We can process an OLAP database, individual cube, Dimension or a specific Partition in a cube.

Q: Name few Business Analysis Enhancements for SSAS?

The following table lists the business intelligence enhancements that are available in Microsoft SQL Server 2005 Analysis Services (SSAS). The table also shows the cube or dimension to which each business intelligence enhancement applies, and indicates whether an enhancement can be applied to an object that was created without using a data source and for which no schema has been generated.

| Enhancement | Type | Applied to | No data source |

| Time Intelligence | Cube | Cube | No |

| Account Intelligence | Dimension | Dimension or cube | No |

| Dimension Intelligence | Dimension | Dimension or cube | Yes |

| Custom Aggregation | Dimension | Dimension (unary operator) or cube | No |

| Semiadditive Behavior | Cube | Cube | Yes> |

| Custom Member Formula | Dimension | Dimension or cube | No |

| Custom Sorting and Uniqueness Settings | Dimension | Dimension or cube | Yes |

| Dimension Writeback | Dimension | Dimension or cube | Yes |

Q: What MDX functions do you most commonly use?

This is a great question because you only know this answer by experience. If you ask me this question, the answer practically rushes out of me. “CrossJoin, Descendants, and NonEmpty, in addition to Sum, Count, and Aggregate. My personal favorite is CrossJoin because it allows me identify non-contiguous slices of the cube and aggregate even though those cube cells don’t roll up to a natural ancestor.” Indeed, CrossJoin has easily been my bread and butter.

Q: Where do you put calculated members?

The reflexive answer is “in the Measures dimension” but this is the obvious answer. So I always follow up with another question. “If you want to create a calculated member that intersects all measures, where do you put it?” A high percentage of candidates can’t answer this question, and the answer is “In a dimension other than Measures.” If they can answer it, I immediately ask them why. The answer is “Because a member in a dimension cannot intersect its own relatives in that dimension.”

Q: How do I find the bottom 10 customers with the lowest sales in 2003 that were not null?

A: Simply using bottomcount will return customers with null sales. You will have to combine it with NONEMPTY or FILTER.

SELECT { [Measures].[Internet Sales Amount] } ON COLUMNS ,

BOTTOMCOUNT(

NONEMPTY(DESCENDANTS( [Customer].[Customer Geography].[All Customers]

, [Customer].[Customer Geography].[Customer] )

, ( [Measures].[Internet Sales Amount] ) )

, 10

, ( [Measures].[Internet Sales Amount] )

) ON ROWS

FROM [Adventure Works]

WHERE ( [Date].[Calendar].[Calendar Year].&[2003] ) ;

Q: How in MDX query can I get top 3 sales years based on order quantity?

By default Analysis Services returns members in an order specified during attribute design. Attribute properties that define ordering are "OrderBy" and "OrderByAttribute". Lets say we want to see order counts for each year. In Adventure Works MDX query would be:

SELECT {[Measures].[Reseller Order Quantity]} ON 0

, [Date].[Calendar].[Calendar Year].Members ON 1

FROM [Adventure Works];

Same query using TopCount:

SELECT

{[Measures].[Reseller Order Quantity]} ON 0,

TopCount([Date].[Calendar].[Calendar Year].Members,3, [Measures].[Reseller Order Quantity]) ON 1

FROM [Adventure Works];

Q: How do you extract first tuple from the set?

Use could usefunction Set.Item(0)

Example:

SELECT {{[Date].[Calendar].[Calendar Year].Members

}.Item(0)}

ON 0

FROM [Adventure Works]

Q: How can I setup default dimension member in Calculation script?

You can use ALTER CUBE statement. Syntax:

ALTER CUBE CurrentCube | YourCubeName UPDATE DIMENSION , DEFAULT_MEMBER='';

1. When is the UPDATE_STATISTICS command used?

- When the processing of large data is done, this command is used.

- Whenever large number of deletions, modification or copy takes place into the tables, the indexes need to be updated to take care of these changes. UPDATE_STATISTICS performs this job.

- Whenever large number of deletions, modification or copy takes place into the tables, the indexes need to be updated to take care of these changes. UPDATE_STATISTICS performs this job.

2. Differentiate between a HAVING CLAUSE and a WHERE CLAUSE.

HAVING CLAUSE

- HAVING CLAUSE is used only with the SELECT statement.

- It is generally used in a GROUP BY clause in a query.

- If GROUP BY is not used, HAVING works like a WHERE clause.

- It is generally used in a GROUP BY clause in a query.

- If GROUP BY is not used, HAVING works like a WHERE clause.

WHERE Clause

- It is applied to each row before they become a part of the GROUP BY function in a query.

3. What do you understand by a view? What does the WITH CHECK OPTION clause for a view do?

- A view is a virtual table that consists of fields from one or more real tables.

- It is usually used to join multiple tables and get the data.

- The WITH CHECK OPTION for a view prevents any modification to the data that does not confirm to the WHERE clause of the view definition.

- This allows the data belonging to the view to be updated through the view.

- It is usually used to join multiple tables and get the data.

- The WITH CHECK OPTION for a view prevents any modification to the data that does not confirm to the WHERE clause of the view definition.

- This allows the data belonging to the view to be updated through the view.

4. Explain query execution plan?

- The optimizer available in SQL Server optimizes the code to be effectively executed.

- A query execution plan shows how this optimizer would run the query.

- Query execution plan can be viewed by :

- Using the Show Execution Plan option available in Query Analyzer,

- Displaying Estimated Execution Plan on the query dropdown menu,

- Use the SET SHOWPLAN_TEXT ON command before running a query and capturing the execution plan event in a SQL Server Profiler trace.

- A query execution plan shows how this optimizer would run the query.

- Query execution plan can be viewed by :

- Using the Show Execution Plan option available in Query Analyzer,

- Displaying Estimated Execution Plan on the query dropdown menu,

- Use the SET SHOWPLAN_TEXT ON command before running a query and capturing the execution plan event in a SQL Server Profiler trace.

5. What is the function of SQL Server Agent Windows service?

- It is a Windows service which handles the tasks scheduled within the SQL Server environment. These tasks are also called as job and are stored with in SQL server. The jobs may run through a trigger, a predefined schedule or on demand.

- This service is very useful in determining why a particular job did not run as intended.

- This service is very useful in determining why a particular job did not run as intended.

6. Comment on Transactions.

- Using transactions we can group all SQL commands into a single unit.

- The transaction begins with some task and finishes only when all tasks within it are over.

- The transaction gets over successfully only when all commands in it are successfully over. Even if one command fails, the whole transaction fails.

- The BEGIN TRANSACTION, ROLLBACK TRANSACTION, and COMMIT TRANSACTION statements are used to work with transactions.

- A group of tasks starts with the begin statement.

- In case of any problem, the rollback command is executed to abort the transaction.

- If all the tasks run successfully, all commands are executed through commit statement.

- The transaction begins with some task and finishes only when all tasks within it are over.

- The transaction gets over successfully only when all commands in it are successfully over. Even if one command fails, the whole transaction fails.

- The BEGIN TRANSACTION, ROLLBACK TRANSACTION, and COMMIT TRANSACTION statements are used to work with transactions.

- A group of tasks starts with the begin statement.

- In case of any problem, the rollback command is executed to abort the transaction.

- If all the tasks run successfully, all commands are executed through commit statement.

7. Differentiate between a primary key and a unique key.

- By default, clustered index on the column are created by the primary key whereas nonclustered index are created by unique key.

- Primary key doesn't allow NULLs, but unique key allows one NULL.

- Primary key doesn't allow NULLs, but unique key allows one NULL.

8. What is recursion? Is it possible for a stored procedure to call itself or recursive stored procedure? How many levels of SP nesting is possible?

Recursion is method of problem solving where the solution is arrived at by repetitively applying the logic and solution to the subsets of the problem.

Transact-SQL supports recursion. So, yes it is possible for a stored procedure to call itself.

Stored procedures and managed code references can be nested up to 32 levels.

9. What are the advantages of using Stored Procedures?

- They help in reducing the network traffic and latency which in turn boosts application performance.

- They help in promoting code reuse.

- They provide better security to data.

- It is possible to encapsulate the logic using stored procedures. This allows to change stored procedure code without affecting clients.

- It is possible to reuse stored procedure execution plans, which are cached in SQL Server's memory. This reduces server overhead.

- They help in promoting code reuse.

- They provide better security to data.

- It is possible to encapsulate the logic using stored procedures. This allows to change stored procedure code without affecting clients.

- It is possible to reuse stored procedure execution plans, which are cached in SQL Server's memory. This reduces server overhead.

10. a.) What do you mean by an execution plan? Why is it used? How would you view it?

a.) An execution plan can be called as a road map that graphically or textually shows the data retrieval methods which have been chosen by the SQL

Server query optimizer, for a stored procedure or ad- hoc query.

b.) It is used because it is a very useful tool for a developer to understand the performance characteristics of a query or stored procedure.

c.) There exists an option called "Show Execution Plan" in Query Analyzer. If this option is turned on, it will display query execution plan in separate window when the query is run again.

Server query optimizer, for a stored procedure or ad- hoc query.

b.) It is used because it is a very useful tool for a developer to understand the performance characteristics of a query or stored procedure.

c.) There exists an option called "Show Execution Plan" in Query Analyzer. If this option is turned on, it will display query execution plan in separate window when the query is run again.

11. You want to implement the following relationships while designing tables. How would you do it?

a.) One-to-one

b.) One-to-many

c.) Many-to-many

a.) One-to-One relationship - can be implemented as a single table and rarely as two tables with primary and foreign key relationships.

b.) One-to-Many relationships - by splitting the data into two tables with primary key and foreign key relationships.

c.) Many-to-Many - by using a junction table with the keys from both the tables forming the composite primary key of the junction table.

b.) One-to-Many relationships - by splitting the data into two tables with primary key and foreign key relationships.

c.) Many-to-Many - by using a junction table with the keys from both the tables forming the composite primary key of the junction table.

12. Differentiate between DELETE and TRUNCATE.

- Truncate can not be rolled back while Delete can be.

- Truncate keeps the lock on table while Delete keeps the lock on each row.

- Truncate resets the counter of the Identity column while Delete doesn't do so.

- Trigger is not fired in Truncate while it happens in Delete.

- Truncate keeps the lock on table while Delete keeps the lock on each row.

- Truncate resets the counter of the Identity column while Delete doesn't do so.

- Trigger is not fired in Truncate while it happens in Delete.

13. What are the properties of the Relational tables?

Relational tables have six properties:

1. Values are atomic.

2. Column values are of the same kind.

3. Each row is unique.

4. The sequence of columns is insignificant.

5. The sequence of rows is insignificant.

6. Each column must have a unique name.

1. Values are atomic.

2. Column values are of the same kind.

3. Each row is unique.

4. The sequence of columns is insignificant.

5. The sequence of rows is insignificant.

6. Each column must have a unique name.

14. Explain the following.

a.) COLLATION.

Collation is a type of sort order. There are mainly three types of sort orders, namely:

i.) Dictionary case sensitive

ii.)Dictionary - case insensitive

iii.)Binary.

b.) Stored Procedure

- It is a set of T-SQL statements combined together to perform a single task formed by combining many small tasks.

- When you actually run a Stored procedure, a set of statements is run.

Collation is a type of sort order. There are mainly three types of sort orders, namely:

i.) Dictionary case sensitive

ii.)Dictionary - case insensitive

iii.)Binary.

b.) Stored Procedure

- It is a set of T-SQL statements combined together to perform a single task formed by combining many small tasks.

- When you actually run a Stored procedure, a set of statements is run.

15. What do you mean by ACID?

- ACID (Atomicity Consistency Isolation Durability) is a quality sought after in a reliable database. Here's the relevance of each quality:

- Atomicity is an all-or-none proposition.

- Consistency - it guarantees that your database is never left by a transaction in a half-finished state.

- Isolation - it keeps transactions separated from each other until they’re finished.

- Durability - it ensures that the database keeps a track of pending changes in a way that the server can recover from an abnormal termination.

- Atomicity is an all-or-none proposition.

- Consistency - it guarantees that your database is never left by a transaction in a half-finished state.

- Isolation - it keeps transactions separated from each other until they’re finished.

- Durability - it ensures that the database keeps a track of pending changes in a way that the server can recover from an abnormal termination.

16. Explain the following:

a.) Dirty pages.

These are the buffer pages that contain modifications which have not been written to disk.

b.) ETL - Extraction, Transformation, and Loading.

- It is the process of copying and cleaning data from heterogeneous sources.

- It is an important part of development projects for data warehousing and business intelligence.

These are the buffer pages that contain modifications which have not been written to disk.

b.) ETL - Extraction, Transformation, and Loading.

- It is the process of copying and cleaning data from heterogeneous sources.

- It is an important part of development projects for data warehousing and business intelligence.

17. Differentiate between a Local and a Global temporary table?

- A local temporary table exists only for the duration of a connection or, if defined inside a compound statement, for the duration of the compound statement.

- Global temporary tables (created with a double “##”) are visible to all sessions.

- Global temporary tables are dropped when the session that created it ends, and all other sessions have stopped referencing it.

- Global temporary tables (created with a double “##”) are visible to all sessions.

- Global temporary tables are dropped when the session that created it ends, and all other sessions have stopped referencing it.

18. Explain different types of Locks in SQL Server.

There are 3 kinds of locks in SQL Server

i.) Shared locks - they are used for operations which do not allow any change or update of data. For e.g. SELECT.

ii.) Update locks - they are used when SQL Server wants to modify a page. The update page lock is then promoted to an exclusive page lock before actually making the changes.

iii.) Exclusive locks - they are used for the data modification operations. For e.g. UPDATE, INSERT, or DELETE.

i.) Shared locks - they are used for operations which do not allow any change or update of data. For e.g. SELECT.

ii.) Update locks - they are used when SQL Server wants to modify a page. The update page lock is then promoted to an exclusive page lock before actually making the changes.

iii.) Exclusive locks - they are used for the data modification operations. For e.g. UPDATE, INSERT, or DELETE.

Subscribe to:

Comments (Atom)